Content moderation continues to be a contentious topic in the world of online media. New regulations and public concern are likely to keep it a priority for years to come. But weaponised AI and other tech advances are making it ever harder to address. A startup out of Cambridge, England, called Unitary AI believes it has landed on a better way to tackle the moderation challenge: using a “multimodal” approach to help parse content in the most complex medium of all, video.

Today, Unitary is announcing $15 million in funding to capitalise on momentum it’s been seeing in the market. The Series A, led by top European VC Creandum with participation also from Paladin Capital Group and Plural, comes as Unitary’s business is growing. The number of videos it is classifying has jumped this year to 6 million/day from 2 million (covering billions of images), and the platform is now adding more languages beyond English. It declined to disclose names of customers but says ARR is now in the millions.

Unitary is using the funding to expand into more regions and to hire more talent. The company is not disclosing its valuation; it previously raised under $2 million and a further $10 million in seed funding; other investors include the likes of Carolyn Everson, the ex-Meta exec.

There have been dozens of startups over recent years harnessing different aspects of artificial intelligence to build content moderation tools.

And when you think about it, the sheer scale of the challenge in video is an apt application for it. No army of people alone would ever be able to parse the tens and hundreds of zettabytes of data that is being created and shared on platforms like YouTube, Facebook, Reddit or TikTok, to say nothing of dating sites, gaming platforms, videoconferencing tools, and other places where videos appear, altogether making up more than 80% of all online traffic.

That angle is also what interested investors. “In an online world, there’s an immense need for a technology-driven approach to identify harmful content,” said Christopher Steed, chief investment officer, Paladin Capital Group, in a statement.

Still, it’s a crowded space. OpenAI, Microsoft (making use of its own AI, not OpenAI’s), Hive, Active Fence / Spectrum Labs, Oterlu (now part of Reddit), Sentropy (now a part of Discord), and Amazon’s Rekognition are just a few of the many tools out there in use.

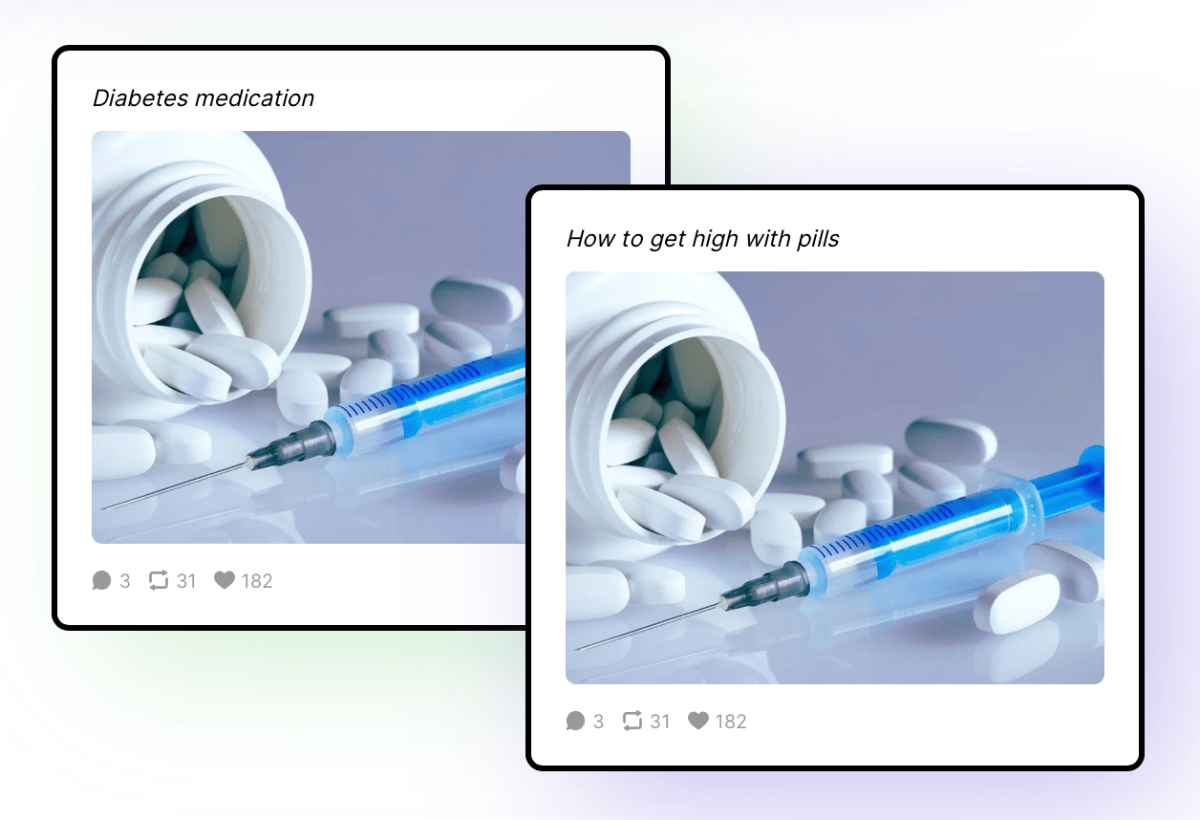

From Unitary AI’s point of view, existing tools are not as effective as they should be when it comes to video. That’s because tools up to now have typically been built to parse data of one type or another (say, text or audio or image) but not in combination, simultaneously. That leads to a lot of false flags (or conversely no flags).

“What is innovative about Unitary is that we have genuine multimodal models,” said CEO Sasha Haco, who cofounded the company with CTO James Thewlis. “Rather than analyzing just a series of frames, in order to understand the nuance and whether a video is [for example] artistic or violent, you need to be able to simulate the way a human moderator watches the video. We do that by analysing text, sound and visuals.”

Customers put in their own parameters for what they want to moderate (or not), and Haco said they typically will use Unitary in tandem with a human team, which in turn will now have to do less work and face less stress.

“Multimodal” moderation seems so obvious; why hasn’t it been done before?

Haco said one reason is that “you can get quite far with the older, visual-only model”. However, it means there is a gap in the market to grow.

The reality is that the challenges of content moderation have continued to dog social platforms, games companies and other digital channels where media is shared by users. Lately, social media companies have signalled a move away from stronger moderation policies; fact checking organizations are losing momentum; and questions remain about the ethics of moderation when it comes to harmful content. The appetite for fighting has waned.

But Haco has an interesting track record when it comes to working on hard, inscrutable subjects. Before Unitary AI, Haco — who holds a PhD in quantum physics — worked on black hole research with Stephen Hawking. She was there when that team captured the first image of a black hole, using the Event Horizon Telescope, but she had an urge to shift her focus to work on earthbound problems, which can be just as hard to understand as a spacetime gravity monster.

Her “epiphany,” she said, was that there were so many products out there in content moderation, so much noise, but nothing had yet matched up with what customers actually wanted.

Thewlis’s expertise, meanwhile, is directly being put to work at Unitary: he also has a PhD, his in computer vision from Oxford, where his speciality was “methods for visual understanding with less manual annotation.”

(‘Unitary’ is a double reference, I think. The startup is unifying a number of different parameters to better understand videos. But also, it may refer to Haco’s previous career: unitary operators are used in describing a quantum state, which in itself is complicated and unpredictable — just like online content and humans.)

Multimodal research in AI has been ongoing for years. But we seem to be entering an era where we are going to start to see a lot more applications of the concept. Case in point: Meta just last week referenced multimodal AI several times in its Connect keynote previewing its new AI assistant tools. Unitary thus straddles that interesting intersection of cutting-edge research and real-world application.

“We first met Sasha and James two years ago and have been incredibly impressed,” said Gemma Bloemen, a principal at Creandum and board member, in a statement. “Unitary has emerged as clear early leaders in the important AI field of content safety, and we’re so excited to back this exceptional team as they continue to accelerate and innovate in content classification technology.”

“From the start, Unitary had some of the most powerful AI for classifying harmful content. Already this year, the company has accelerated to 7 figures of ARR, almost unheard of at this early stage in the journey,” said Ian Hogarth, a partner at Plural and also a board member.