Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of recent stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

Last week during its annual Connect conference, Meta launched a range of new AI-powered chatbots across its messaging apps: WhatsApp, Messenger and Instagram DMs. Available for select users in the U.S., the bots are tuned to channel certain personalities and mimic celebrities including Kendall Jenner, Dwyane Wade, MrBeast, Paris Hilton, Charli D’Amelio and Snoop Dogg.

The bots are Meta’s latest bid to boost engagement across its family of platforms, particularly among a younger demographic. (According to a 2022 Pew Research Center survey, only about 32% of internet users aged 13 to 17 say that they ever use Facebook, an over-50% drop from the year prior.) But the AI-powered personalities are also a reflection of a broader trend: the growing popularity of “character-driven” AI.

Consider Character.AI, which offers customizable AI companions with distinct personalities, like Charli D’Amelio as a dance enthusiast or Chris Paul as a pro golfer. This summer, Character.AI’s mobile app drew over 1.7 million new installs in less than a week while its web app was topping 200 million visits per month. Character.AI claimed that, moreover, as of May, users were spending on average 29 minutes per visit, a figure that the company said eclipsed OpenAI’s ChatGPT by 300% as ChatGPT usage declined.

That virality attracted backers including Andreessen Horowitz, which poured well over $100 million in venture capital into Character.AI, which was last valued at $1 billion.

Elsewhere, there’s Replika, the controversial AI chatbot platform, which in March had around 2 million users, 250,000 of whom were paying subscribers.

That’s not to mention Inworld, another AI-driven character success story, which is developing a platform for creating more dynamic NPCs in video games and other interactive experiences. To date, Inworld hasn’t shared much in the way of usage metrics. But the promise of more expressive, organic characters, driven by AI, has landed Inworld investments from Disney and grants from Fortnite and Unreal Engine developer Epic Games.

So clearly, there’s something to AI-powered chatbots with personalities. But what is it?

I’d wager that chatbots like ChatGPT and Claude, while undeniably useful in decidedly professional contexts, don’t hold the same allure as “characters.” They’re not as interesting, frankly, and it’s no surprise. General-purpose chatbots were designed to complete specific tasks, not hold an enlivening conversation.

But the question is, will AI-powered characters have staying power? Meta’s certainly hoping so, considering the resources it’s pouring into its new bot collection. I’m not sure myself; as with most tech, there’s a decent chance the novelty will wear off eventually. And then it’ll be on to the next big thing, whatever that ends up being.

Here are some other AI stories of note from the past few days:

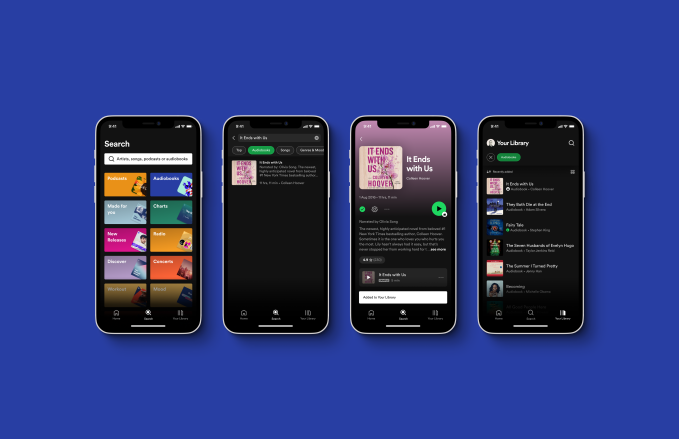

- Spotify tests AI-generated playlists: References discovered in the Spotify app’s code indicate the company may be developing generative AI playlists users could create using prompts, Sarah reports.

- How much are artists making from generative AI? Who knows? Some generative AI vendors, like Adobe, have established funds and revenue sharing agreements to pay artists and other contributors to the data sets used to train their generative AI models. But it’s not clear how much these artists can actually earn, TC learned.

- Google expands AI-powered search: Google opened up its generative AI search experience to teenagers and introduced a new feature to add context to the content that users see, along with an update to help train the search experience’s AI model to better detect false or offensive queries.

- Amazon launches Bedrock in GA, brings CodeWhisperer to the enterprise: Amazon announced the general availability of Bedrock, its managed platform that offers a choice of generative AI models from Amazon itself and third-party partners through an API. The company also launched an enterprise tier for CodeWhisperer, Amazon’s AI-powered service to generate and suggest code.

- OpenAI entertains hardware: The Information reports that storied former Apple product designer Jony Ive is in talks with OpenAI CEO Sam Altman about a mysterious AI hardware project. In the meantime, OpenAI, which is planning to soon release a more powerful version of its GPT-4 model with image analysis capabilities, could see its secondary-market valuation soar to $90 billion.

- ChatGPT gains a voice: In other OpenAI news, ChatGPT evolved into much more than a text-based search engine, with OpenAI announcing recently that it’s adding new voice and image-based smarts to the mix.

- The writers’ strike and AI: After almost five months, the Writers Guild of America reached an agreement with Hollywood studios to end the writers’ strike. During the historic strike, AI emerged as a key point of contention between the writers and studios. Amanda breaks down the relevant new contract provisions.

- Getty Images launches an image generator: Getty Images, one of the largest suppliers of stock images, editorial photos, videos and music, launched a generative AI art tool that it claims is “commercially safer” than other, rival solutions on the market. Prior to the launch of its own tool, Getty had been a vocal critic of generative AI products like Stable Diffusion, which was trained on a subset of its image content library.

- Adobe brings gen AI to the web: Adobe officially launched Photoshop for the web for all users with paid plans. The web version, which was in beta for almost two years, is now available with Firefly-powered AI tools such as generative fill and generative expand.

- Amazon to invest billions in Anthropic: Amazon has agreed to invest up to $4 billion in the AI startup Anthropic, the two firms said, as the e-commerce group steps up its rivalry against Microsoft, Meta, Google and Nvidia in the fast-growing AI sector.

More machine learnings

When I was talking with Anthropic CEO Dario Amodei about the capabilities of AI, he seemed to think there were no hard limits that we know of — not that there are none whatsoever, but that he had yet to encounter a (reasonable) problem that LLMs were unable to at least make a respectable effort at. Is it optimism or does he know of what he speaks? Only time will tell.

In the meantime, there’s still plenty of research going on. This project from the University of Edinburgh takes neural networks back to their roots: neurons. Not the complex, subtle neural complexes of humans, but the simpler (yet highly effective) ones of insects.

Ants and other small bugs are remarkably good at navigating complex environments, despite their more rudimentary vision and memory capabilities. The team built a digital network based on observed insect neural networks, and found that it was able to successfully navigate a small robot visually with very little in the way of resources. Systems in which power and size are particularly limited may be able to use the method in time. There’s always something to learn from nature!

Color science is another space where humans lead machines, more or less by definition: we are constantly striving to replicate what we see with better fidelity, but sometimes that fails in ways that in retrospect seem predictable. Skin tone, for example, is imperfectly captured by systems designed around light skin, especially when ML systems with biased training sets come into play. If an imaging system doesn’t understand skin color, it can’t set exposure and color properly.

Sony is aiming to improve these systems with a new metric for skin color that more comprehensively but efficiently defines it using a color scale as well as perceived light/dark levels. In the process of doing this they showed that bias in existing systems extends not just to lightness but to skin hue as well.

Speaking of fixing photos, Google has a new technique almost certainly destined (in some refined form) for its Pixel devices, which are heavy on the computational photography. RealFill is a generative plug-in that can fill in an image with “what should have been there.” For instance, if your best shot of a birthday party happens to crop out the balloons, you give the system the good shot plus some others from the same scene. It figures out that there “should” be some balloons at the top of the strings and adds them in using information from the other pictures.

It’s far from perfect (they’re still hallucinations, just well informed hallucinations) but used judiciously it could be a really helpful tool. Is it still a “real” photo though? Well, let’s not get into that just now.

Lastly, machine learning models may prove more accurate than humans in predicting the number of aftershocks following a big earthquake. To be clear (as the researchers emphasize), this isn’t about “predicting” earthquakes, but characterizing them accurately when they happen so that you can tell whether that 5.8 is the type that leads to three more minor quakes within an hour, or only one more after 20 minutes. And the latest models are still only decent at it, under specific circumstances, but they are not wrong, and they can work through large amounts of data quickly. In time these models may help seismologists better predict quakes and aftershocks, but as the scientists note, it’s far more important to be prepared; after all, even knowing one is coming doesn’t stop it from happening.